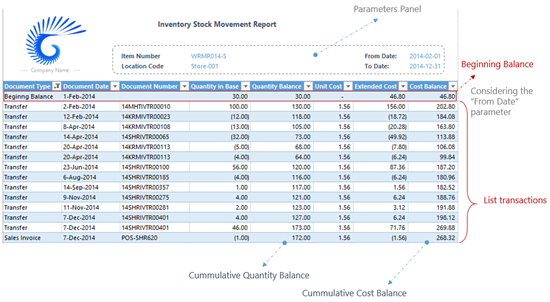

I have been working recently on a report to retrieve data through SQL script, the database I was dealing with is almost 50 GB and the number of daily transactions is huge. In such cases, performance really matters, it should be the main parameter to check through the QA phase. So I will initially provide the final presentation layer of the report then digs back in the SQL to see how calculations have been analyzed and considered.

The following case is a good example of how to minimize the execution duration from hours to only one second

Now, apparently, the design implies considering two primary characteristics which are:

- Historical Report: There should be a date range determining the starting date and ending date of the reported data.

- Cumulative: the report should provide running total column for both, the quantity balance and cost balance on a transaction-bases .

Technical Consideration

When writing the SQL query for this, there are plenty of ideas which could flash into your mind, such as; but not to limit:

- Inner join

- Sub query

- Cursor

In this article, the comparison will be limited to both methods; sub query and cursor.

Sub query Method

DECLARE

@ItemNumber NVARCHAR(MAX) ,

@LocationCode NVARCHAR(MAX) ,

@From_DateRange DATE ,

@To_DateRange DATE

--SET @ItemNumber = ' '

--SET @LocationCode = ' '

--SET @From_DateRange = ' '

--SET @To_DateRange = ' '

SELECT [Record index] ,

[Document Type] ,

[Item Number] ,

[Document Date] ,

[Document Number] ,

[Location Code] ,

[Unit of Measurement] ,

[Quantity in Base] ,

[Quantity Balance] ,

[Unit Cost] ,

[Extended Cost] ,

[Cost Balance]

FROM ( SELECT TOP 1

0 AS 'Record Index' ,

@ItemNumber AS 'Item Number' ,

@From_DateRange AS 'Document Date' ,

'Beginng Balance' AS 'Document Type' ,

'' AS 'Document Number' ,

@LocationCode AS 'Location Code' ,

'' AS 'Unit of Measurement' ,

0 AS 'Transaction Quantity' ,

( SELECT SUM(TRXQTYInBase)

FROM dbo.SEE30303

WHERE ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

AND DOCDATE < @From_DateRange

) AS 'Quantity in Base' ,

( SELECT SUM(TRXQTYInBase)

FROM dbo.SEE30303

WHERE ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

AND DOCDATE < @From_DateRange

) AS 'Quantity Balance' ,

0 AS 'Unit Cost' ,

( SELECT SUM(EXTDCOST)

FROM dbo.SEE30303

WHERE ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

AND DOCDATE < @From_DateRange

) AS 'Extended Cost' ,

( SELECT SUM(EXTDCOST)

FROM dbo.SEE30303

WHERE ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

AND DOCDATE < @From_DateRange

) AS 'Cost Balance'

FROM dbo.SEE30303

UNION ALL

SELECT *

FROM

( SELECT Record_Index ,

ITEMNMBR ,

DOCDATE ,

DOCUMENTType ,

DOCNUMBR ,

LOCNCODE ,

UOFM ,

TRXQTY ,

TRXQTYInBase ,

( SELECT SUM(TRXQTYInBase)

FROM

( SELECT

RANK() OVER

( ORDER BY DOCDATE,

DOCNUMBR,

DEX_ROW_ID

) AS Record_Index ,

DOCDATE ,

ITEMNMBR ,

LOCNCODE ,

TRXQTYInBase ,

DOCNUMBR

FROM dbo.SEE30303

WHERE

ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

) AS I

WHERE

I.Record_Index <= X.RECORD_Index

) AS 'Quantity Balance' ,

UNITCOST ,

EXTDCOST ,

( SELECT SUM(EXTDCOST)

FROM ( SELECT

RANK() OVER

( ORDER BY DOCDATE,

DOCNUMBR, DEX_ROW_ID )

AS Record_Index ,

DOCDATE ,

ITEMNMBR ,

LOCNCODE ,

TRXQTYInBase ,

DOCNUMBR ,

EXTDCOST

FROM dbo.SEE30303

WHERE

ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

) AS I

WHERE

I.Record_Index <= X.RECORD_Index

) AS 'Cost Balance'

FROM ( SELECT

RANK() OVER

( ORDER BY DOCDATE,

DOCNUMBR, DEX_ROW_ID )

AS Record_Index ,

ITEMNMBR ,

DOCDATE ,

CASE DOCTYPE

WHEN 1 THEN 'Adjustment'

WHEN 2 THEN 'Variance'

WHEN 3 THEN 'Transfer'

WHEN 4 THEN 'Purchase Receipt'

WHEN 5 THEN 'Sales Return'

WHEN 6 THEN 'Sales Invoice'

WHEN 7 THEN 'Assembly'

WHEN 8

THEN 'POP Cost Adjustment'

WHEN 11

THEN 'IV Cost Adjustment'

ELSE ''

END AS DOCUMENTType ,

DOCNUMBR ,

LOCNCODE ,

UOFM ,

TRXQTY ,

TRXQTYInBase ,

UNITCOST ,

EXTDCOST

FROM dbo.SEE30303 AS a

WHERE ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

) AS X

) AS Y

WHERE Y.DOCDATE BETWEEN @From_DateRange

AND @To_DateRange

) AS RPT

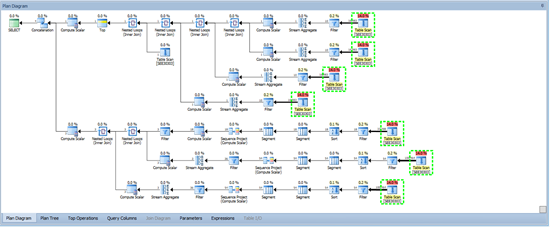

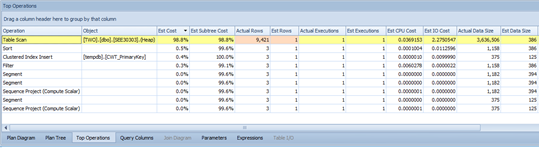

When executing the query above, it was so clear that the performance is just too miserable for it to be deployed on a live environment with thousands of daily transactions. Here is the “Plan Diagram” as generated by SQL Sentry Plan Explorer (You may use the traditional SQL execution plan for this as well). Apparently, the same table is scanned too many times, it is quite consuming.

With each sub-query, SQL server rescans the table to get the running total for the specific transaction generated.

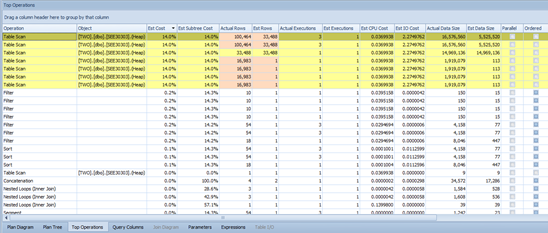

If we look at the “Top Operations” tab, we can see the table scan processing consuming up to 98 % of the overall process (15 % for each * 7 times)

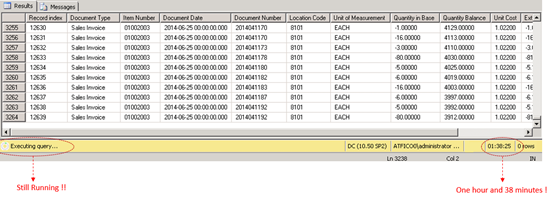

Of course, there is no “balanced” DBA on earth who would even think of running this script on a live environment. Although, my curiosity pushed my sanity to give it a shot.

Database: Testdb ( back up from the live one )

Database Size: above 50 GB

Table Size (SEE30303): 823,008

Number of Rows (SEE30303): 835160 rows

The selected parameters (Item, Location, Starting data and Ending Date) shall retrieve a data set of almost 7000 + rows.

After One hour and a half, I stopped the query from running since I got bored of waiting, and I still have around 4000 records. See below:

Cursor Method

For me, the case was quite tempting to roll up my sleeves and start the SQL journey to understand how I could end up with enhanced and better performance. For that, I picked the cursor method. Here is the code:

DECLARE @ResutlTable TABLE

(

[Date] DATETIME ,

ITEMNumber NVARCHAR(MAX) ,

LocationCode NVARCHAR(MAX) ,

Quantity NUMERIC(38, 10) ,

Quantitybalance NUMERIC(38, 10) ,

Cost NUMERIC(38, 10) ,

CostBalance NUMERIC(38, 10)

)

DECLARE @From DATE ,

@To DATE ,

@Date DATETIME ,

@ItemNumber NVARCHAR(MAX) ,

@LocationCode NVARCHAR(MAX) ,

@Quantity NUMERIC(38, 10) ,

@Cost NUMERIC(38, 10) ,

@QuantityBalance NUMERIC(38, 10) ,

@CostBalance NUMERIC(38, 10)

SET @QuantityBalance = 0

SET @CostBalance = 0

--SET @ItemNumber = ''

--SET @LocationCode = ''

--SET @From = ''

--SET @To =

DECLARE IV_CurSOR CURSOR LOCAL STATIC FORWARD_ONLY READ_ONLY

FOR

SELECT RANK() OVER

( ORDER BY DOCDATE,

DOCNUMBR, DEX_ROW_ID )

AS Record_Index ,

ITEMNMBR ,

LOCNCODE ,

TRXQTYInBase ,

EXTDCOST

FROM dbo.SEE30303

WHERE DOCDATE BETWEEN @FROM AND @To

AND ITEMNMBR = @ItemNumber

AND LOCNCODE = @LocationCode

ORDER BY DOCDATE ,

DOCNUMBR ,

DEX_ROW_ID

OPEN IV_CurSOR;

FETCH NEXT FROM IV_CurSOR

INTO @Date,

@ItemNumber,

@LocationCode,

@Quantity,

@Cost

WHILE @@FETCH_STATUS = 0

BEGIN

SET @QuantityBalance = @QuantityBalance + @Quantity

SET @CostBalance = @CostBalance + @Cost

INSERT @ResutlTable

( [Date] ,

ITEMNumber ,

LocationCode ,

Quantity ,

Quantitybalance ,

Cost ,

CostBalance

)

SELECT @Date ,

@ItemNumber ,

@LocationCode ,

@Quantity ,

@QuantityBalance ,

@Cost ,

@CostBalance;

FETCH NEXT FROM IV_CurSOR

INTO @Date,

@ItemNumber,

@LocationCode,

@Quantity,

@Cost;

END

CLOSE IV_CurSOR;

DEALLOCATE IV_CurSOR;

SELECT *

FROM @ResutlTable

Here is the plan diagram generated by the same tool above:

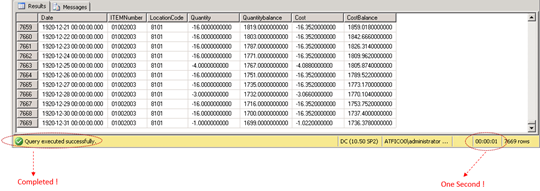

Here it the top operations for the cursor:

The cursor took only one second to be executed, one the same database mentioned above with the same parameters. The SQL Server scans the table only one time, and as the cursor is fetching new parameters, local variables for the quantity balances and cost balances are updated accordingly.

See below, Now we are talking !

The bottom line is, be quite aware when writing your SQL query, pay attention to the SQL Query analyzer, execution plan and other associated tools, because simply, performance really matters.

Related reports can be found on Dynamics GP Essentials - SQL Scripts Page

Codes for the reports above can be downloaded from here >> Download Link.

Best Regards,

Mahmoud M. AlSaadi

Msg 208, Level 16, State 1, Line 42

ReplyDeleteInvalid object name 'dbo.SEE30303'.

Hello,

DeleteThat simply means that you have not deployed the HITB reset tool, which is why the SEE30303 table is not built yet.

You need to deploy HITB reset tool, which is illustrated in another blog post:

http://mahmoudsaadi.blogspot.com/2014/04/hitb-essentials-series-hitb-reset-tool.html

BE SMART AND BECOME RICH IN LESS THAN 3DAYS....It all depends on how fast

ReplyDeleteyou can be to get the new PROGRAMMED blank ATM card that is capable of

hacking into any ATM machine,anywhere in the world. I got to know about

this BLANK ATM CARD when I was searching for job online about a month

ago..It has really changed my life for good and now I can say I'm rich and

I can never be poor again. The least money I get in a day with it is about

$50,000.(fifty thousand USD) Every now and then I keeping pumping money

into my account. Though is illegal,there is no risk of being caught

,because it has been programmed in such a way that it is not traceable,it

also has a technique that makes it impossible for the CCTVs to detect

you..For details on how to get yours today, email the hackers on : (

atmmachinehackers1@gmail.com ). Tell your

loved once too, and start to live large. That's the simple testimony of how

my life changed for good...Love you all ...the email address again is ;

atmmachinehackers1@gmail.com